Social media looks free and open on the surface. But behind the screen, artificial intelligence makes countless silent decisions about what you can and cannot say online.

Right now, AI systems filter through millions of posts, deciding their fate in milliseconds. These invisible algorithms shape online conversations in ways most users don’t notice.

As tech giants roll out more sophisticated moderation tools in 2025, the line between protection and censorship grows thinner. From automated content flags to shadow bans, this in-depth look reveals the hidden systems controlling your voice online.

The real power behind social platforms lies not with users or even human moderators, but with lines of code making split-second choices about acceptable speech.

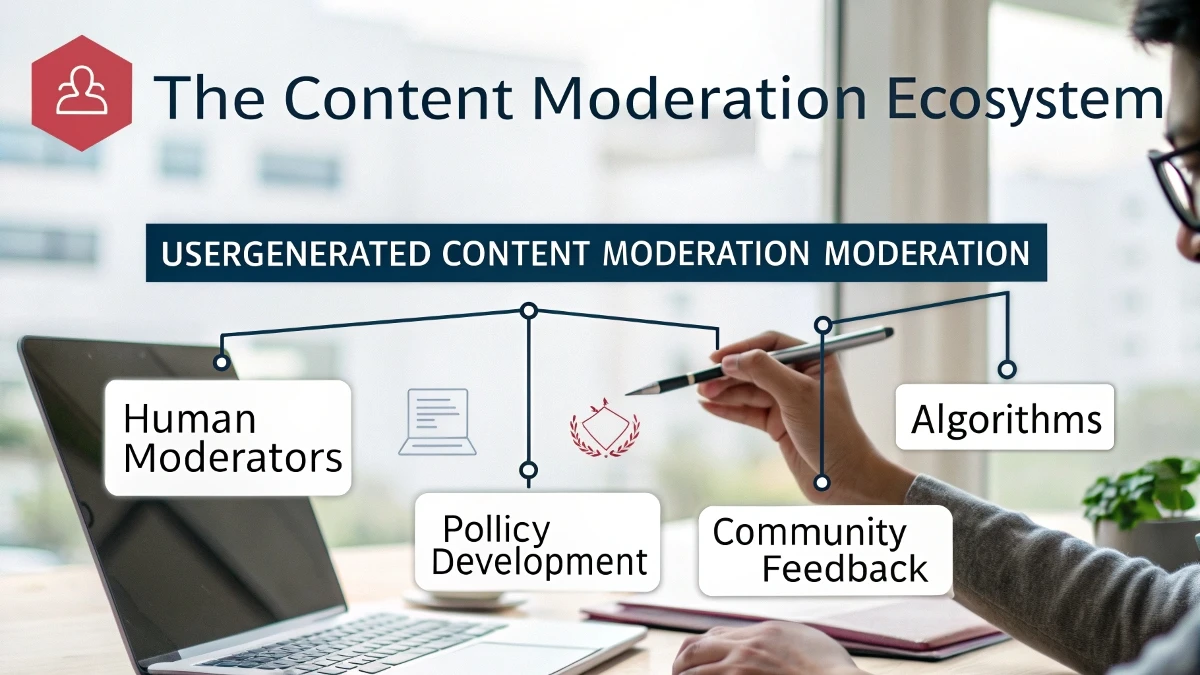

The Content Moderation Ecosystem

Social media platforms process billions of pieces of content daily through intricate AI moderation systems. Facebook alone reviews over 500 million posts every 24 hours using automated systems. These moderation frameworks combine multiple AI models working in parallel to assess text, images, videos, and audio against established policy guidelines.

The backend infrastructure supporting these systems involves distributed computing networks processing content through tiered evaluation stages. At the first tier, basic pattern matching and keyword filtering catch obvious violations. The second tier uses more sophisticated natural language processing and computer vision models to analyze context and nuance. The final tier often involves human review for borderline cases or appeals.
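The three tiers described above can be sketched in a few lines. This is a minimal illustration, not any platform's real pipeline: the `BLOCKLIST` phrases, the `moderate` function, and both thresholds are hypothetical, and the tier-two model is passed in as a plain scoring function.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical tier-one blocklist; real systems maintain far larger lists.
BLOCKLIST = {"buy followers", "free crypto giveaway"}


@dataclass
class Decision:
    action: str  # "allow", "remove", or "human_review"
    tier: int    # tier that produced the decision


def tier1_keywords(text: str) -> bool:
    """Tier 1: cheap substring matching for obvious violations."""
    lowered = text.lower()
    return any(phrase in lowered for phrase in BLOCKLIST)


def moderate(
    text: str,
    score_fn: Callable[[str], float],
    remove_at: float = 0.9,   # assumed threshold
    review_at: float = 0.5,   # assumed threshold
) -> Decision:
    """Run content through the tiers, stopping at the first firm decision."""
    if tier1_keywords(text):
        return Decision("remove", 1)
    score = score_fn(text)  # Tier 2: stand-in for an NLP or vision model
    if score >= remove_at:
        return Decision("remove", 2)
    if score >= review_at:
        return Decision("human_review", 3)  # Tier 3: borderline cases
    return Decision("allow", 2)
```

Ordering the tiers from cheapest to most expensive means most content never reaches the costly model, and only the ambiguous middle band reaches a person.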

Major tech companies build proprietary moderation systems while licensing specialized tools from companies like Hive, Clarifai, and Two Hat Security. These third-party providers offer APIs that integrate with existing content workflows. The moderation landscape has evolved into a complex ecosystem where different vendors focus on specific types of harmful content – some specialize in hate speech detection, others in CSAM identification, and others in spam and bot detection.

Technical Implementation

Modern content moderation relies heavily on transformer-based language models fine-tuned on platform-specific data. These models use attention mechanisms to weigh each word against its surrounding context, which lets them detect subtle policy violations that simple keyword matching would miss. For image and video content, convolutional neural networks trained on massive datasets identify problematic visual elements with increasing accuracy.

The technical architecture typically involves multiple specialized models working together. One model might focus on toxicity detection, another on hate speech identification, and others on spam detection or bot activity patterns. These models feed their outputs into an orchestration layer that makes final decisions based on configurable policies. Platforms implement different thresholds for different types of content and different user contexts.
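An orchestration layer of this kind might look roughly like the sketch below. The `POLICY` table, its category names, the `new_account` context, and every threshold value are assumptions made for illustration; real platforms do not publish these settings.

```python
# Hypothetical policy table: one threshold per category and user context.
POLICY = {
    "toxicity": {"default": 0.85, "new_account": 0.70},
    "hate_speech": {"default": 0.80, "new_account": 0.65},
    "spam": {"default": 0.90, "new_account": 0.60},
}


def orchestrate(scores: dict[str, float], user_context: str = "default") -> list[str]:
    """Return the categories whose model score meets the configured threshold.

    `scores` maps a category name to the output of that category's model.
    Categories missing from POLICY are ignored.
    """
    violations = []
    for category, score in scores.items():
        thresholds = POLICY.get(category)
        if thresholds is None:
            continue
        # Fall back to the default threshold for unknown contexts.
        limit = thresholds.get(user_context, thresholds["default"])
        if score >= limit:
            violations.append(category)
    return sorted(violations)
```

The same model outputs can yield different outcomes: `orchestrate({"toxicity": 0.75, "spam": 0.65})` flags nothing for an established account, while the same scores with `user_context="new_account"` flag both categories. Keeping thresholds in configuration rather than in the models lets policy change without retraining.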

The backend systems use sophisticated caching and load balancing to handle massive throughput requirements. Content gets processed through geographic points of presence to minimize latency. As Sam Altman noted in his 2024 Stanford speech: “The challenge isn’t just building accurate models, it’s building systems that can make millions of decisions per second while maintaining consistent policy enforcement across global user bases.”

Platform-Specific Approaches

TikTok uses a multi-stage filtering system that processes videos through several AI models in parallel. (The name “Project Clover” refers to TikTok's European data-security program, not to this moderation pipeline.) The first stage analyzes audio transcripts for policy violations while computer vision models simultaneously scan visual content. A separate model evaluates video metadata and user interaction patterns. The system makes decisions in under 100 milliseconds by running these analyses concurrently on specialized hardware.
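Running the analyses concurrently, rather than one after another, is what keeps total latency close to the slowest single model. The sketch below illustrates the idea with Python's `asyncio`; the three analyzer functions, the `*_risk` keys, and the `budget_s` parameter are hypothetical stand-ins, with short sleeps in place of real model inference.

```python
import asyncio


async def analyze_audio(video: dict) -> dict:
    await asyncio.sleep(0.005)  # simulated model latency
    return {"audio": video.get("audio_risk", 0.0)}


async def analyze_frames(video: dict) -> dict:
    await asyncio.sleep(0.005)
    return {"visual": video.get("visual_risk", 0.0)}


async def analyze_metadata(video: dict) -> dict:
    await asyncio.sleep(0.005)
    return {"metadata": video.get("metadata_risk", 0.0)}


async def review_video(video: dict, budget_s: float = 0.1) -> dict:
    """Run all analyzers concurrently under one shared time budget.

    Raises asyncio.TimeoutError if the budget is exceeded, which a caller
    could treat as a signal to fall back to a slower review path.
    """
    results = await asyncio.wait_for(
        asyncio.gather(
            analyze_audio(video),
            analyze_frames(video),
            analyze_metadata(video),
        ),
        timeout=budget_s,
    )
    merged: dict = {}
    for partial in results:
        merged.update(partial)
    return merged
```

Calling `asyncio.run(review_video({"visual_risk": 0.7}))` returns one merged score dictionary after roughly the time of a single analyzer, not the sum of all three.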

X (formerly Twitter) adopted a hybrid approach combining automated filtering with community notes and user reporting. Their system assigns trust scores to users based on their reporting accuracy history. The platform implemented a new appeals process in 2024 where contested moderation decisions get reviewed by both AI systems and human moderators. According to internal data, this reduced false positive rates by 23% while maintaining quick response times.
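X has not published how its trust scores are calculated, so the following is only one plausible way to score reporters by accuracy history. It uses a smoothed accuracy ratio (Laplace smoothing), so a brand-new reporter starts at a neutral 0.5 rather than at 0 or 1. The function names and the `needed` threshold are assumptions.

```python
def trust_score(upheld: int, total: int) -> float:
    """Smoothed share of a user's past reports that moderators upheld.

    Adding 1 to the numerator and 2 to the denominator keeps users with
    little history close to 0.5 until evidence accumulates.
    """
    if upheld < 0 or total < upheld:
        raise ValueError("need 0 <= upheld <= total")
    return (upheld + 1) / (total + 2)


def should_escalate(reporter_histories: list[tuple[int, int]], needed: float = 1.5) -> bool:
    """Escalate a post once the summed trust of its reporters reaches `needed`.

    Each history is an (upheld, total) pair for one reporting user.
    """
    combined = sum(trust_score(upheld, total) for upheld, total in reporter_histories)
    return combined >= needed
```

Under this scheme, two reports from historically accurate users can outweigh several from users whose reports are rarely upheld, which blunts coordinated mass-reporting.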

Meta built an integrated moderation framework called “Sentinel” that spans Facebook, Instagram, and Threads. The system shares threat intelligence across platforms while maintaining separate policy enforcement for each. It uses federated learning to improve moderation models without centralizing sensitive user data. The framework includes specialized modules for different content types: one for memes and image macros, another for live video streams, and others for encrypted messages and private groups. Their system processes over 100 terabytes of content daily with a reported 99.5% precision rate on high-risk violations.
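Federated learning in general works by training on data where it lives and sharing only model parameters with a central server. The sketch below shows the standard federated averaging step in plain Python. It is a generic illustration of the technique, not Meta's implementation, and the function name and data layout are assumptions.

```python
def federated_average(client_updates: list[tuple[list[float], int]]) -> list[float]:
    """Combine locally trained weights into one global model.

    Each update is (weights, sample_count). Clients that trained on more
    examples contribute proportionally more to the average. Raw user
    content never leaves the client; only the weights are shared.
    """
    if not client_updates:
        raise ValueError("no client updates")
    total = sum(count for _, count in client_updates)
    if total == 0:
        raise ValueError("sample counts sum to zero")
    dim = len(client_updates[0][0])
    return [
        sum(weights[i] * count for weights, count in client_updates) / total
        for i in range(dim)
    ]
```

For example, `federated_average([([1.0, 2.0], 1), ([3.0, 4.0], 3)])` yields `[2.5, 3.5]`, pulled toward the client with three times as many training samples.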

Key Challenges and Debates

The tension between swift content removal and preservation of legitimate speech creates persistent challenges for AI moderation systems. When platforms increase automated filtering to catch harmful content quickly, they often see a corresponding rise in false positives that incorrectly remove valid content. A recent MIT study found that between 8% and 12% of appealed content removals were later reversed across major platforms, indicating significant error rates in automated systems.
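The trade-off is easy to see on a small labeled sample. In the sketch below, the `SAMPLE` scores and labels are invented for illustration, and `confusion_at` is a hypothetical helper that counts outcomes at a given removal threshold.

```python
# Invented (model_score, is_actually_harmful) pairs for illustration only.
SAMPLE = [
    (0.95, True),
    (0.85, True),
    (0.80, False),
    (0.65, True),
    (0.60, False),
    (0.30, False),
]


def confusion_at(threshold: float, scored: list[tuple[float, bool]]) -> dict[str, int]:
    """Count outcomes if everything scoring at or above `threshold` is removed."""
    removed_harmful = sum(1 for s, harmful in scored if s >= threshold and harmful)
    removed_valid = sum(1 for s, harmful in scored if s >= threshold and not harmful)
    missed_harmful = sum(1 for s, harmful in scored if s < threshold and harmful)
    return {
        "removed_harmful": removed_harmful,
        "removed_valid": removed_valid,  # false positives
        "missed_harmful": missed_harmful,
    }
```

At a strict threshold of 0.9 the sample gives one harmful removal, no false positives, and two missed harmful posts. Lowering the threshold to 0.6 catches all three harmful posts but also removes two valid ones.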

Training data bias emerges as a critical issue in AI moderation. Models trained primarily on English-language content from Western sources often misinterpret cultural contexts and linguistic nuances from other regions. For instance, certain Arabic phrases get incorrectly flagged at higher rates because the training data captures too little of their cultural context. Mark Zuckerberg addressed this in a 2024 blog post stating: “Building truly fair AI moderation requires us to understand thousands of cultural contexts, something even humans struggle with.”

The technical complexity of context-aware moderation poses significant challenges. An AI system must distinguish news reporting about terrorism from terrorist content, educational medical content from adult material, and artistic expression from policy violations. Modern transformer models improve contextual understanding but still make errors that human moderators would easily avoid. Platforms implement sophisticated appeal mechanisms, but the initial automated decisions can still cause temporary censorship of legitimate content.

Impact Analysis

The widespread deployment of AI content moderation has fundamentally altered online discourse patterns. Users adapt their language and behavior to avoid automated flags, sometimes leading to creative workarounds that make genuine policy enforcement more difficult. This creates an ongoing technical arms race as moderation systems evolve to detect evasion tactics while users develop new ones.
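One common evasion tactic is swapping letters for look-alike digits or symbols, or adding accents, so that keyword filters no longer match. A moderation system can counter this by normalizing text before matching. The sketch below is a minimal version of that idea; the `SUBSTITUTIONS` table is a small hypothetical sample, and real systems handle far more variants.

```python
import unicodedata

# Hypothetical look-alike map; real evasion vocabularies are much larger.
SUBSTITUTIONS = str.maketrans(
    {"0": "o", "1": "i", "3": "e", "4": "a", "5": "s", "@": "a", "$": "s"}
)


def normalize(text: str) -> str:
    """Fold accents, case, and common character swaps before keyword matching."""
    # NFKD splits accented letters into a base letter plus combining marks.
    decomposed = unicodedata.normalize("NFKD", text)
    stripped = "".join(ch for ch in decomposed if not unicodedata.combining(ch))
    return stripped.lower().translate(SUBSTITUTIONS)
```

With this in place, `normalize("$c@m")` and `normalize("Sp4m")` become `"scam"` and `"spam"`. The cost is new false positives, since genuine numbers are rewritten too (`"10"` becomes `"io"`), which is one reason each round of this arms race tends to add complexity.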

Business implications ripple throughout the digital economy. Content creators must navigate complex and sometimes opaque moderation systems to maintain their online presence. Small platforms struggle to implement effective moderation due to high costs and technical complexity. This strengthens the market position of large tech companies that can afford sophisticated in-house moderation systems. The moderation technology market has grown into a multi-billion dollar industry with specialized vendors competing to provide increasingly sophisticated tools.

Legal frameworks strain to keep pace with technological capabilities. Different jurisdictions impose varying requirements for content removal, creating complex compliance challenges for global platforms. The EU’s Digital Services Act mandates specific moderation transparency requirements, while other regions maintain different standards. Platforms must balance these competing legal obligations while maintaining consistent global policies.

Future Developments

Next-generation moderation systems will likely incorporate more sophisticated multi-modal understanding. Rather than processing text, images, and video separately, new architectures will evaluate content holistically. These systems will better understand memes, interpret satire, and grasp cultural references. Advanced language models will improve understanding of context and nuance across hundreds of languages.

Blockchain-based decentralized moderation systems are emerging as potential alternatives to centralized platform control. These systems use distributed consensus mechanisms to make moderation decisions, potentially reducing the power of any single entity to control online speech. Several experimental platforms launched in 2024 use this approach, though they face significant scalability challenges.

The regulatory landscape continues evolving rapidly. New proposals focus on algorithmic transparency, requiring platforms to explain moderation decisions and provide detailed appeal processes. Technical solutions for privacy-preserving moderation are advancing, with federated learning and secure enclaves enabling better moderation of encrypted content without compromising user privacy. As Bill Gates noted in his 2025 annual letter: “The future of online safety depends on finding the right balance between powerful AI tools and human oversight, all while preserving the open character of the internet.”
