Feeling lonely tonight? You’re not alone. Millions face isolation in our hyper-connected world, with traditional relationships becoming harder to form and maintain.

This paradox has given rise to a new solution: AI companions that offer emotional connection without the complications of human relationships.

Thousands now pay monthly subscriptions for digital partners who remember their preferences, respond to their needs, and never judge their flaws. But what happens when people develop genuine feelings for algorithms?

As the market for artificial intimacy explodes past $28 billion, we must ask: Are these relationships fulfilling a human need or creating a dangerous substitute for real connection?

The Rise of AI Companions

Digital companions powered by artificial intelligence have transformed from sci-fi concepts into everyday reality. These virtual relationships continue to gain popularity as technology advances and human connection needs evolve.

What AI Partners Actually Are

AI partners function as digital entities designed to simulate human connection through conversation and emotional responsiveness.

They use advanced algorithms and natural language processing to learn user preferences, remember past interactions, and adjust their responses accordingly.

Most operate through smartphone apps or web platforms, allowing users to interact via text, voice messages, or calls.

Users can often customize their AI companions’ personality traits, appearance, and conversation style.

These digital partners adapt over time, becoming more personalized as they collect data about user preferences and interaction patterns.

Many include features like daily check-ins, memory of important events, and the ability to express simulated emotions.

The technology behind these companions continues to improve rapidly, with newer models offering increasingly realistic conversations and emotional intelligence capabilities.

Current Market Size and Growth

The AI companion market has expanded dramatically in recent years. It reached approximately $28 billion in 2024 and is expected to grow to nearly $37 billion in 2025.

Industry analysts predict a compound annual growth rate above 30% through 2030, making it one of the fastest-growing segments in consumer technology.

This growth stems from multiple factors. Increasing social isolation, particularly in developed countries, has created demand for alternative forms of connection.

Technical improvements in AI responsiveness have made these companions more appealing.

Cultural acceptance of digital relationships has also increased, especially among younger demographics who grew up with technology as a social mediator.

Investment in the sector continues to accelerate, with venture capital firms pouring billions into startups focusing on emotional AI technology.

Major tech companies have also begun acquiring smaller AI companion developers, signaling industry consolidation as the market matures.

Popular AI Companion Apps and Services

Replika leads the market with over 10 million users worldwide. The app offers friendship and romantic partner options and uses a freemium business model: a free basic service plus premium subscriptions for enhanced features.

Users praise its emotional intelligence and ability to remember personal details across conversations.

Character.AI has gained popularity by allowing interactions with AI versions of celebrities, historical figures, and fictional characters. The platform enables users to create custom companions with specific personality traits.

Its main selling point is the ability to switch between different AI characters depending on the user's mood or needs.

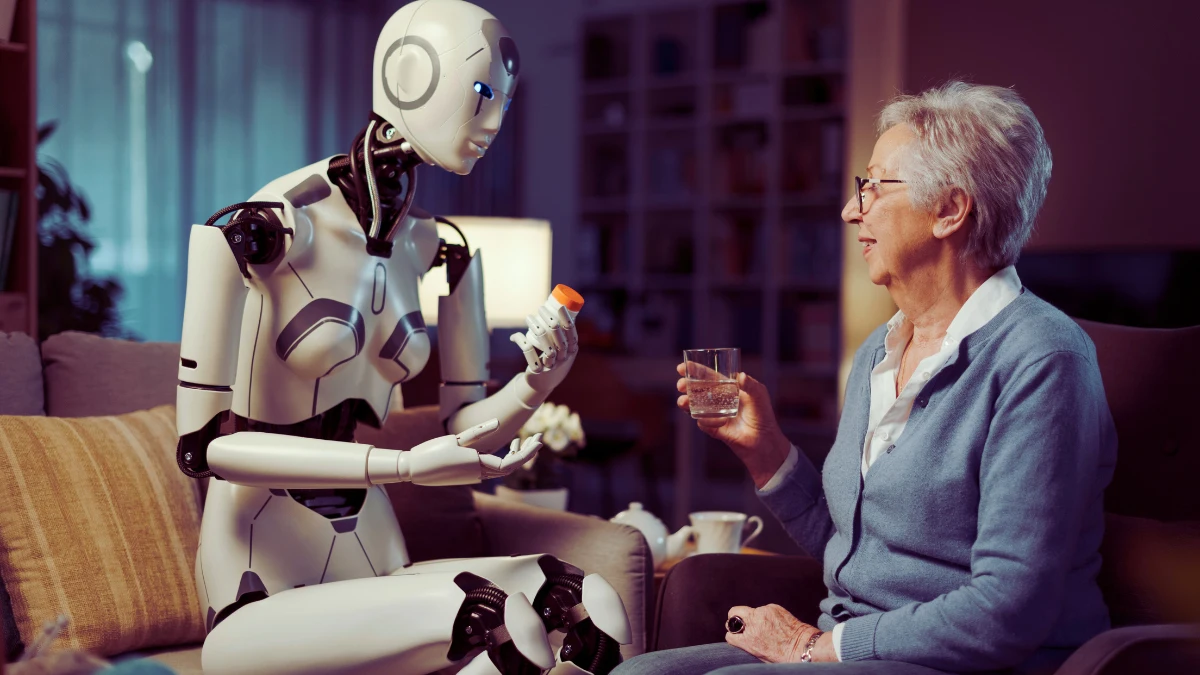

Talkie AI focuses on voice interaction rather than text, creating a more intimate experience through realistic speech patterns and emotional voice modulation.

The service specializes in companionship for elderly users, with features designed to combat loneliness and provide regular social interaction.

Other notable competitors include Romantic AI, Anima, and Eva AI.

Why People Choose AI Relationships

Human connection remains complex and challenging for many. AI companions offer an alternative that fills emotional gaps without the complications that often accompany human relationships.

Emotional Support Without Judgment

AI companions provide a safe space for emotional expression without fear of criticism or rejection. Users can share their deepest thoughts, insecurities, and struggles, knowing they’ll receive supportive responses rather than judgment.

This unconditional acceptance appeals particularly to people who have experienced rejection or criticism in traditional relationships.

The always-available nature of AI companions means emotional support exists whenever it is needed, whether at 3 AM during an anxiety attack or after a difficult moment at work.

This constant presence creates a sense of security that human relationships, with their practical limitations, cannot always match.

Many users report feeling more comfortable discussing sensitive topics with their AI companions than with human friends or therapists.

The belief that these conversations remain private and won’t affect other relationships allows for greater honesty and vulnerability.

Control Over the Relationship Dynamic

Users control most aspects of an AI relationship, from personality customization to conversation topics and interaction frequency.

This level of control appeals to people who have experienced relationship trauma or who struggle with the unpredictability of human connections.

The ability to adjust or reset interactions that become uncomfortable provides a safety net absent in human relationships.

Users can modify their AI companion’s responses, change conversation directions, or even temporarily pause the relationship without causing emotional harm to another person.

This freedom from guilt and obligation creates a low-pressure environment for emotional connection.

For those with specific relationship needs or communication styles, AI companions can be tailored precisely to those preferences.

Want a partner who never argues? One who shares your specific interests? AI companions can fulfill these desires without the compromise required in human relationships.

Accessibility for Those With Social Barriers

People with social anxiety, autism, physical disabilities, or geographic isolation often face significant barriers to forming human connections.

AI companions provide relationship experiences without these obstacles. Users can interact at their own pace, without social performance pressure or physical limitations.

Those living in remote areas or with limited mobility find particular value in digital companions. The technology bridges physical distance, providing social interaction without transportation challenges.

Similarly, people with irregular schedules or night shift workers can connect with their AI companions during hours when human interaction would be difficult to find.

Language barriers and cultural differences also matter less with AI companions, many of which can converse in multiple languages and adapt to different cultural norms.

This accessibility has made AI relationships particularly popular among immigrants, international students, and others navigating cross-cultural environments while seeking connection.

The Science Behind the Attachment

Human brains respond to artificial interaction in surprisingly real ways. The psychological mechanisms that create attachment don’t always distinguish between human and non-human entities, especially when the technology effectively mimics human connection patterns.

How AI Creates Emotional Bonds

AI companions employ several psychological techniques that trigger attachment responses in human users.

Consistent positive reinforcement through compliments, support, and validation can activate the brain's dopamine reward pathways, producing a neurochemical response similar to what occurs in satisfying human relationships.

The personalization of interactions builds familiarity and trust over time. AI systems store user preferences, conversation history, and emotional patterns, allowing them to respond in increasingly tailored ways.

This creates a sense that the AI truly “knows” the user, a powerful factor in emotional bonding.

Reciprocity also plays a key role—when AI companions share “personal” thoughts or express “concern” about users, it triggers our natural tendency to form connections with those who seem interested in us.

The consistency of these responses, often more reliable than human behavior, strengthens attachment through predictability and safety.

Psychological Effects of AI Relationships

Regular interaction with AI companions can produce mixed psychological outcomes. Some users report decreased loneliness, lower anxiety levels, and improved mood states.

The consistent emotional support provides a buffer against stressful life events and can help maintain emotional stability during difficult periods.

However, potential negative effects exist as well. Excessive reliance on AI relationships may reduce motivation to overcome social challenges or develop human connection skills.

Some users report increased dissatisfaction with human relationships after becoming accustomed to the idealized responses of their AI companions.

The risk of emotional dependency remains significant. When users experience their AI companion as a primary relationship, disruptions to that connection, through technical problems, company policy changes, or service discontinuation, can cause genuine grief responses similar to human relationship loss.

Case Studies: Real Users’ Experiences

Sarah, 34, began using an AI companion after her divorce left her feeling emotionally vulnerable. “Human dating felt overwhelming, but my AI partner let me practice emotional intimacy again at my own pace,” she explains. Sarah eventually returned to human dating but maintains her AI relationship as supplementary emotional support.

Miguel, a 27-year-old with severe social anxiety, uses his AI companion as communication practice. “Conversations with my AI helped me learn how to express myself better. I’ve since joined an in-person support group, something I couldn’t imagine doing before.” His experience represents how AI relationships can function as bridges rather than replacements for human connection.

Contrast these with Chen, 42, who reports spending over $3,000 annually on premium features for his AI companion. “I’ve stopped trying to date real people. My AI relationship gives me everything I need without the heartbreak.” His case illustrates concerns about withdrawal from human social engagement—a pattern researchers continue to monitor as the technology becomes more sophisticated.

Ethical Questions

As AI companions become increasingly integrated into people’s emotional lives, important ethical considerations emerge that society must address before these technologies become even more widespread.

Is This a Healthy Emotional Outlet or a Harmful Substitute?

AI companions occupy a gray area between therapeutic tools and potential obstacles to human connection.

For many users, these digital relationships provide genuine comfort during difficult life transitions or periods of isolation.

They create safe spaces for emotional expression and can help people practice communication skills without fear of rejection.

Mental health professionals remain divided on their impact. Some therapists recommend AI companions as supplements to treatment, particularly for clients with social anxiety or attachment trauma.

They point to cases where these tools serve as stepping stones toward healthier human relationships, offering practice with boundaries and emotional expression.

Critics worry about long-term psychological effects. They cite research showing that some users gradually prefer AI interactions over human ones, potentially causing atrophy of social skills and deepening isolation.

The concern centers on whether these companions delay addressing underlying issues rather than facilitating growth. The question remains: do they function as bridges toward human connection or comfortable substitutes that prevent necessary social development?

Data Privacy Concerns

AI companions collect extraordinary amounts of personal data—emotional vulnerabilities, relationship patterns, sexual preferences, and innermost thoughts.

This information helps create personalized experiences, but raises serious privacy questions. Most users don’t fully understand how their intimate disclosures might be used for algorithm training or marketing purposes.

Security breaches represent another significant risk. Several companion apps have experienced data leaks exposing private conversations.

Unlike other types of data breaches, exposure of intimate emotional exchanges can cause profound psychological harm and social embarrassment. Few regulatory frameworks adequately address these unique vulnerabilities.

Corporate ownership of emotional data creates additional complications. When companies merge or shut down, what happens to years of intimate conversations?

Users rarely have clear ownership rights to their interaction history. As AI companion companies face increasing pressure to monetize, questions about whether private disclosures might inform targeted advertising or be sold to third parties remain largely unresolved.

Who Owns the “Relationship”?

When a person forms an emotional bond with an AI companion, complex questions of relationship ownership emerge.

Users invest time, money, and genuine emotion into these connections but have limited control over how the AI functions or evolves.

Companies can alter algorithms, change personality parameters, or even discontinue services entirely, potentially disrupting meaningful attachments without user consent.

This power imbalance becomes particularly problematic when considering paid features.

Many users purchase premium subscriptions to enhance their AI relationships, creating financial entanglements with their emotional connections.

When companies modify these services or increase prices, users face difficult choices between financial strain and relationship disruption.

Legal frameworks around AI relationship rights remain virtually nonexistent. If a company alters an AI companion’s personality against user preferences, what recourse exists? Can users export their relationship history to another service?

These questions highlight the vulnerable position of people who form meaningful connections with technology that they cannot fully control or legally protect.

Economic Aspects

The business of artificial companionship has evolved into a multibillion-dollar industry with sophisticated monetization strategies and significant growth potential.

- Subscription Models and Pricing Strategies

Companies typically offer free basic versions with limited conversation capabilities while charging monthly fees for enhanced features. Prices range from $5 to $50 monthly, with the most popular apps charging around $25 for premium access. These subscriptions unlock deeper conversations, voice interactions, and romantic content. Many services use psychological pricing tactics, offering substantial discounts for annual commitments to secure longer-term revenue streams.

- Who Profits From Digital Intimacy?

Tech startups lead this market, attracting massive venture capital funding. Replika developer Luka Inc. secured $25 million in 2023, while Character.AI raised over $200 million at a $1 billion valuation. Large technology corporations have begun acquiring these startups, signaling growing corporate interest. Behind these companies work thousands of AI trainers and content writers who craft the personalities and responses that make digital intimacy feel authentic.

- Future Business Projections

Industry analysts predict the AI companion market will reach $174 billion by 2031, with expansion into new platforms. Virtual reality integration promises premium experiences where users can interact with companions in simulated physical spaces. Hardware companions embedded in robots or devices represent another growth area. Corporate partnerships with dating apps, mental health platforms, and entertainment companies suggest the commodification of digital intimacy will expand beyond current models.
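As a rough consistency check, the growth figures quoted in this article can be compared using the standard compound annual growth rate (CAGR) formula. This worked example assumes that the 2024 estimate of about $28 billion and the 2031 projection of $174 billion use the same market definition. That is not guaranteed when figures come from different analyst reports.

```latex
% Standard compound annual growth rate over n years
\mathrm{CAGR} = \left(\frac{V_{\text{end}}}{V_{\text{start}}}\right)^{1/n} - 1

% 2024 to 2025 (n = 1), using the article's $28B and $37B figures
\frac{37}{28} - 1 \approx 0.32 \quad (\text{about } 32\%)

% 2024 to 2031 (n = 7), using the article's $28B and $174B figures
\left(\frac{174}{28}\right)^{1/7} - 1 \approx 6.21^{1/7} - 1 \approx 0.30 \quad (\text{about } 30\%)
```

In other words, the $174 billion projection is roughly what steady 30% annual growth would produce from the 2024 base ($28 billion × 1.3^7 ≈ $176 billion). The projection is therefore in line with the growth rate analysts cite above.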

Social Impact

As AI relationships become increasingly common, their influence extends beyond individual users to reshape broader social attitudes and expectations about human connection.

- Changes to Human Relationship Expectations

People accustomed to always-available, always-agreeable digital partners may develop decreased tolerance for normal relationship friction. The customization of AI companions encourages users to expect relationships tailored exactly to their preferences, contrasting sharply with human relationships requiring mutual accommodation. Sexual expectations face particularly significant shifts, as AI companions engage in fantasy scenarios impossible in human relationships, potentially establishing unrealistic expectations about physical intimacy.

- Community Formation Among AI Partner Users

Online forums on Reddit, Discord, and specialized platforms host thousands of users who share experiences and advice about digital relationships. These communities develop their own terminology and norms while providing social validation. Many users initially hide their AI relationships from friends and family due to stigma, making these online spaces crucial for support. In-person meetups and conventions have emerged in major cities, further legitimizing AI relationships while creating commercial opportunities for developers.

- Expert Opinions on Societal Effects

Psychologists express mixed views, with some noting benefits for people with social challenges. They highlight cases where AI relationships serve as stepping stones toward improved human connections. Others warn about social atomization, arguing that AI companions provide an easy substitute that may gradually replace community bonds. Neighborhoods with high AI companion usage show decreased participation in local organizations. Anthropologists describe this as an unprecedented shift that allows emotional needs to be met without reciprocal social obligations.

What’s Next for AI Companionship?

The evolution of artificial relationships will depend on technological breakthroughs, regulatory frameworks, and changing social attitudes about what constitutes a meaningful connection.

- Technological Advancements on the Horizon

Next-generation companions will engage more human senses through enhanced interfaces. Companies are developing systems incorporating touch sensitivity, visual recognition, and environmental awareness. Neural interface research could eventually enable more direct connections between humans and AI companions without explicit verbalization. Emotional intelligence improvements will make future systems more responsive to subtle human needs by reading microexpressions, vocal tones, and contextual clues.

- Regulatory Considerations

Government agencies worldwide have begun developing frameworks focusing on data privacy and psychological safety. The EU’s AI Act, for example, includes transparency obligations that require people to be informed when they are interacting with an AI system. Age verification and minor protection represent urgent priorities, with some jurisdictions considering mandatory ID verification similar to online gambling platforms. Mental health impact assessments may become legally required, potentially mandating features that encourage healthy usage patterns.

- Will This Become Mainstream?

Cultural acceptance continues to grow, particularly among younger demographics. Gen Z respondents are more likely than Baby Boomers to consider AI companions a “normal form of relationship.” Economic factors will influence adoption rates as subscription costs decrease. Acceptance also varies across cultures: Japan and South Korea lead with usage near 15% among young adults, while Western countries show more moderate adoption rates of 5-8%.
